Data has become the primary asset for modern enterprises, yet managing its sheer volume is an increasing challenge. As workloads grow from terabytes to petabytes, traditional infrastructure often becomes a bottleneck. Hyperscale computing addresses this problem, which is why it has become a critical component of digital transformation.

Below is an exploration of what hyperscale computing entails, the infrastructure behind it, and how leading organizations use it to drive growth.

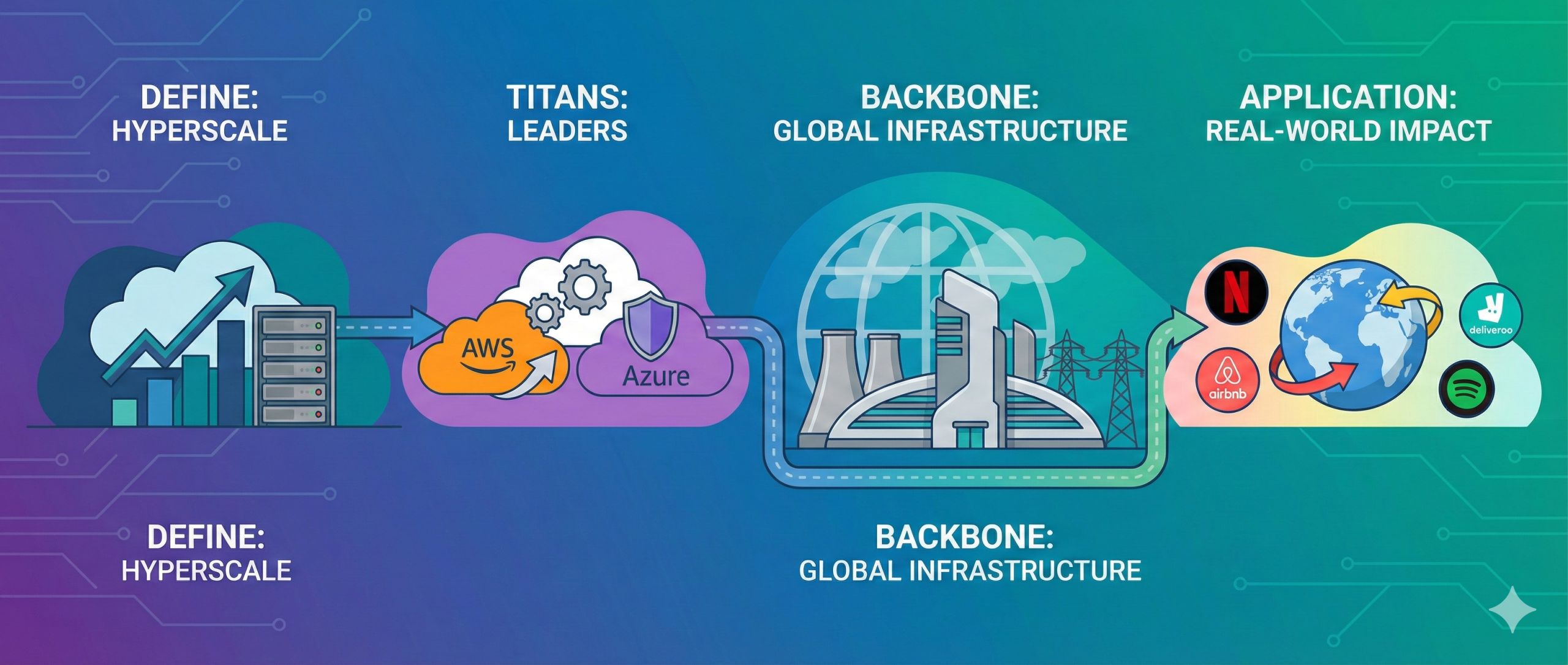

Defining Hyperscale Computing

In cloud infrastructure, hyperscale represents a distinct paradigm shift. It refers to a system’s ability to expand computational and storage resources massively and dynamically through a distributed, horizontally scalable architecture.

Traditional setups typically scale by upgrading the hardware of individual servers with more CPU, memory, or storage (“scaling up”). Hyperscale environments instead “scale out,” adding more nodes to a distributed system as demand grows and releasing them as it falls. They provision and de-provision these resources rapidly to meet the fluctuating demands of data-intensive workloads. This architecture allows service providers to manage massive data volumes with high levels of automation and cost-efficiency.
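One way to picture scaling out is as a control rule that keeps choosing how many identical nodes to run. The minimal sketch below borrows the replica formula documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current × observed / target). The function name `desired_nodes`, the 60% CPU target, and the node bounds are illustrative assumptions, not any provider’s defaults, and the sketch omits refinements such as tolerance bands and cooldown windows that production autoscalers add.

```python
import math


def desired_nodes(current_nodes: int, observed_pct: int,
                  target_pct: int = 60,
                  min_nodes: int = 2, max_nodes: int = 100) -> int:
    """Hypothetical helper: how many nodes keep average CPU near target_pct.

    Modeled on the Kubernetes HPA rule desired = ceil(current * observed / target),
    then clamped to [min_nodes, max_nodes]. Percentages are integers so that
    exact quotients (e.g. 900 / 60) are not nudged upward by float rounding.
    """
    if current_nodes <= 0 or target_pct <= 0:
        raise ValueError("current_nodes and target_pct must be positive")
    raw = math.ceil(current_nodes * observed_pct / target_pct)
    # Never scale to zero, and never exceed a cost ceiling.
    return max(min_nodes, min(max_nodes, raw))


# Peak load: 10 nodes running at 90% CPU, so the fleet scales out.
print(desired_nodes(10, 90))  # 15
# Quiet period: 10 nodes running at 15% CPU, so the fleet scales in.
print(desired_nodes(10, 15))  # 3
```

The same rule handles both directions, which is what lets a hyperscale platform release capacity (and stop paying for it) once a spike passes.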

The Strategic Value of Hyperscale

The defining characteristic of a hyperscaler is not merely size, but agility.

- Flexibility: Businesses can respond quickly to market shifts, adding or releasing resources as demand changes to optimize costs.

- Security: Major providers offer advanced security management systems that are often beyond the reach of individual on-premises data centers.

- Innovation: By offloading infrastructure management, organizations can focus resources on core business objectives and innovation rather than hardware maintenance.

The Market Leaders: AWS and Azure

While the sector includes several key players, Amazon Web Services (AWS) and Microsoft Azure are frequently the primary options for enterprise-grade hyperscale computing.

- AWS (Amazon Web Services): Known for its extensive service catalog and vast global footprint, AWS provides robust tools for virtually every use case, from basic storage to complex machine learning.

- Microsoft Azure: Azure often distinguishes itself through seamless integration with existing Microsoft ecosystems, making it a logical choice for enterprises already reliant on Microsoft software.

Both providers use their immense scale to offer a level of reliability and reach that smaller private cloud implementations struggle to match.

The Backbone: Hyperscale Data Centers

Hyperscale computing relies on massive physical facilities designed for maximum efficiency and minimal human intervention. These data centers utilize advanced cooling systems, redundant power supplies, and optimized physical layouts to support the heavy lifting of the modern internet.

These facilities are strategically located to minimize latency. Some of the most notable include:

- Northern Virginia (AWS): A central hub for internet traffic in the United States, offering extensive connectivity.

- The Dalles, Oregon (Google): A massive complex supporting data-heavy services like YouTube and Search.

- Quincy, Washington (Microsoft): A key facility powering the rapid expansion of Azure’s global services.

- Iowa (Meta/Facebook): A facility noted for its energy efficiency, supporting global social networking activity.
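Physics explains why location matters for latency. Before any routing or processing delay, a request is limited by how fast light travels through optical fiber, roughly 200,000 km/s (about two-thirds of its speed in a vacuum). The sketch below computes that floor on round-trip time; the fiber speed is an approximation, and the distances are arbitrary examples rather than measurements of any facility listed above.

```python
# Assumption: light in optical fiber covers roughly 200,000 km/s,
# i.e. about 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def min_round_trip_ms(fiber_km: float) -> float:
    """Lower bound on round-trip time imposed by propagation delay alone.

    Real latency is higher: cable routes are longer than straight lines, and
    routing, queuing, and server processing all add time.
    """
    return 2 * fiber_km / FIBER_KM_PER_MS


for km in (100, 1_000, 4_000):
    print(f"{km:>5} km of fiber -> at least {min_round_trip_ms(km):.1f} ms round trip")
```

Because this floor grows with distance no matter how fast the servers are, placing capacity near large user populations is one of the few ways to reduce it.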

Essential Elements of the Ecosystem

Hyperscale environments serve as incubators for advanced technologies, providing the raw compute power necessary for:

- AI and Machine Learning: Training complex models requires vast resources. Hyperscale clouds allow organizations to automate processes and extract insights from large datasets without purchasing expensive hardware.

- Serverless Computing: This model abstracts the infrastructure layer entirely, allowing developers to build and deploy applications without managing servers, thereby improving operational efficiency.

- Managed Services: Providers offer turnkey solutions for database management, security monitoring, and network optimization, reducing the operational burden on internal IT teams.
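To illustrate the serverless model, the sketch below is a function written in the style of an AWS Lambda Python handler, which the platform invokes with an event and a context object. The function body, the `name` field in the event, and the status-code/body response shape are assumptions for illustration. The point is what is absent: the developer supplies only this function, and the provider takes care of the servers that run it and how many copies run at once.

```python
import json


def handler(event, context):
    """Hypothetical serverless function: greet the caller named in the event.

    Written in the style of an AWS Lambda Python handler, which receives the
    triggering event (here a dict) and a runtime context object. The code
    contains no server setup; the platform decides where and how many
    instances run.
    """
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Local usage: call the function directly with a sample event and no context.
if __name__ == "__main__":
    print(handler({"name": "Ada"}, None))
```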

Strategic Adoption: What Belongs in the Cloud?

While the potential of hyperscale is significant, migration requires careful planning. Not every workload is immediately suitable for a public cloud environment.

Decision-makers typically evaluate data sensitivity, scalability requirements, and cost implications. Generally, data-intensive tasks and enterprise applications with fluctuating demand are the strongest candidates for migration: pay-as-you-go capacity lets companies meet peak performance needs without paying for idle hardware during quieter periods, balancing performance with financial prudence.
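A back-of-the-envelope comparison shows why the shape of demand matters. Owned capacity must be sized for the peak and paid for around the clock, while pay-as-you-go capacity is billed only for the node-hours actually used. In this minimal sketch, the demand profiles and both hourly prices are hypothetical placeholders (owned capacity is assumed to cost half the on-demand rate per node-hour), and the model ignores real factors such as data-transfer fees, reserved-capacity discounts, and migration costs.

```python
def fixed_cost(hourly_demand, cost_per_node_hour):
    """Owned capacity: provision for the peak and pay for it every hour."""
    return max(hourly_demand) * len(hourly_demand) * cost_per_node_hour


def on_demand_cost(hourly_demand, cost_per_node_hour):
    """Pay-as-you-go: pay only for the nodes running in each hour."""
    return sum(hourly_demand) * cost_per_node_hour


# Hypothetical 24-hour profiles (nodes needed per hour).
spiky = [4] * 8 + [10] * 10 + [40] * 4 + [10] * 2   # sharp evening peak
steady = [10] * 24                                   # flat, predictable load

OWNED_RATE, CLOUD_RATE = 0.50, 1.00  # hypothetical $ per node-hour

for label, profile in (("spiky", spiky), ("steady", steady)):
    print(f"{label}: owned ${fixed_cost(profile, OWNED_RATE):.2f}, "
          f"on-demand ${on_demand_cost(profile, CLOUD_RATE):.2f}")
```

Under these assumptions the spiky workload costs less on demand ($312 versus $480), while the steady one costs less on owned hardware ($120 versus $240). That is one reason not every workload is an immediate candidate for the public cloud.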

Real-World Application

The efficacy of the hyperscale model is best illustrated by the companies that rely on it to function at a global scale:

- Netflix: Utilizes hyperscale infrastructure to deliver seamless streaming to millions of concurrent users, scaling dynamically during peak viewing hours.

- Airbnb: Leverages the cloud to manage fluctuating booking demand and real-time customer service interactions globally.

- Deliveroo: Relies on high-availability infrastructure to coordinate real-time logistics between restaurants, riders, and customers.

- Spotify: Manages immense libraries of audio content and user data to provide personalized recommendations with low latency.

By providing global reach, stringent security, and on-demand scalability, hyperscale computing has established itself as the foundation for the next generation of digital business.