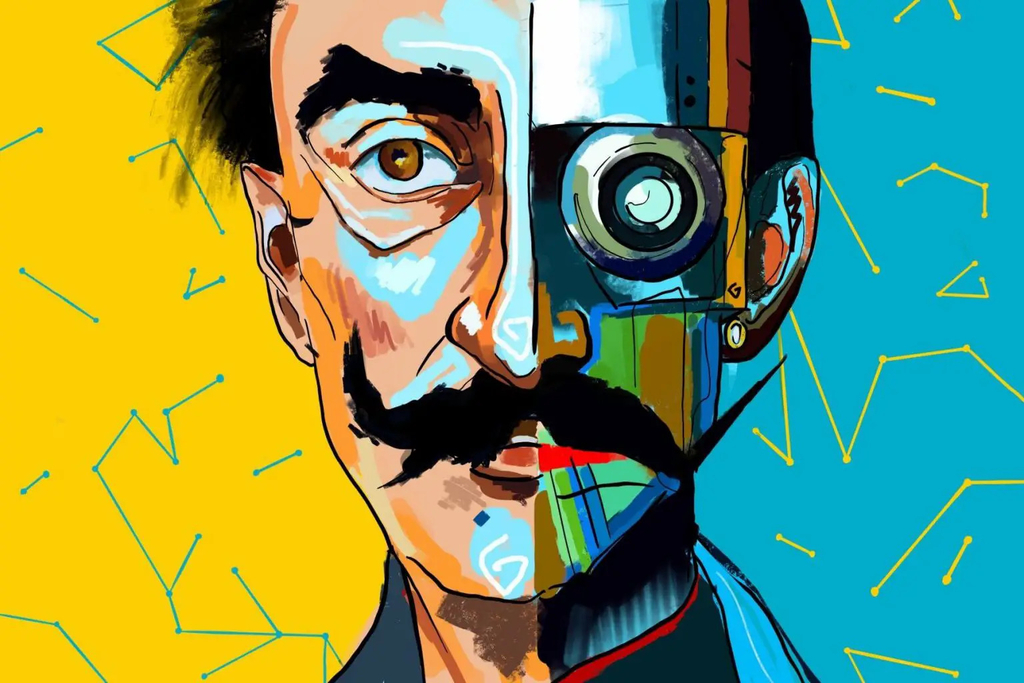

DALL-E, a compelling artificial intelligence (AI) system developed by OpenAI, has taken the world of image generation by storm. Its name is a portmanteau of WALL-E, the animated Pixar robot, and Salvador Dalí, the Spanish surrealist artist. The system lets users turn written descriptions into striking, often lifelike images. The secret behind DALL-E’s success is the combination of two cutting-edge AI techniques: GPTs and diffusion models. Together, these two paradigms let DALL-E interpret even complex linguistic cues and translate them into captivating visual narratives. Because it was trained on large collections of paired images and text, DALL-E can create images that are not only visually striking but also tell the story a prompt describes. Its impact on image generation is hard to overstate.

Text to Image: GPTs at Play

DALL-E’s image generation system owes much to the power of generative pre-trained transformers (GPTs). These models are trained on vast amounts of text and code, which lets them produce text that is often nearly indistinguishable from human writing, with all its complexities and nuances. The key to GPTs’ success is pre-training: the model repeatedly predicts the next token across massive amounts of text, and in doing so learns the underlying patterns and relationships within language. This ability to decode complex textual descriptions forms the foundation of DALL-E’s visual creations.
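To make the pre-training idea concrete, here is a deliberately tiny sketch in Python. It is not a transformer: it is a bigram model that counts which word follows which in a three-sentence toy corpus invented for illustration. Counting gives the maximum-likelihood estimate for this simple model, so it minimizes the same kind of next-token objective GPT pre-training uses, and the sketch scores text with the average negative log-likelihood (cross-entropy) that pre-training drives down. The floor value for unseen word pairs is an arbitrary assumption.

```python
import math
from collections import Counter, defaultdict


def train_bigram(corpus: list[str]) -> dict[str, dict[str, float]]:
    """Estimate P(next word | current word) by counting adjacent word pairs."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            counts[current][nxt] += 1
    model = {}
    for current, nexts in counts.items():
        total = sum(nexts.values())
        model[current] = {word: count / total for word, count in nexts.items()}
    return model


def avg_negative_log_likelihood(model, sentence: str) -> float:
    """Average next-token loss (cross-entropy) over a sentence."""
    words = sentence.lower().split()
    pairs = list(zip(words, words[1:]))
    loss = 0.0
    for current, nxt in pairs:
        p = model.get(current, {}).get(nxt, 1e-6)  # small floor for unseen pairs
        loss -= math.log(p)
    return loss / len(pairs)


corpus = [
    "a red fox jumps over a fence",
    "a red apple sits on a table",
    "a blue bird sits on a fence",
]
model = train_bigram(corpus)
print(model["a"])
print(avg_negative_log_likelihood(model, "a red apple sits on a table"))
print(avg_negative_log_likelihood(model, "a red fox sits on a fence"))
```

A sentence that follows patterns seen in the corpus gets a low loss, while one containing an unseen word pair ("fox sits") gets a much higher one. GPTs learn the same lesson at vastly larger scale, with a neural network in place of the count table.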

GPTs connect the user’s imagination with DALL-E’s visual output. When a user enters a textual description, the GPT analyzes its semantics, syntax, and context to capture the essence of the desired imagery. This grasp of language lets GPTs produce accurate, detailed, and visually specific descriptions. Those descriptions serve as the guiding input for diffusion models, which work alongside GPTs to power DALL-E’s image generation.
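Whatever model reads the prompt, its output has to reach the image model as numbers. The sketch below shows only that interface: text in, fixed-length vector out. It uses the hashing trick (each word is hashed into one of `dim` buckets) as a deliberately crude, hypothetical stand-in for a learned transformer encoder; `encode_prompt`, `similarity`, and the 16-dimensional size are illustrative assumptions, not anything DALL-E uses.

```python
import hashlib
import math


def encode_prompt(prompt: str, dim: int = 16) -> list[float]:
    """Map a prompt to a fixed-length, unit-length vector via the hashing trick.

    Crude stand-in for a learned text encoder: it ignores word order and meaning.
    """
    vec = [0.0] * dim
    for word in prompt.lower().split():
        digest = hashlib.sha256(word.encode("utf-8")).digest()
        bucket = int.from_bytes(digest[:4], "little") % dim  # deterministic bucket per word
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm > 0 else vec


def similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity; vectors from encode_prompt are already unit-length."""
    return sum(x * y for x, y in zip(a, b))


c1 = encode_prompt("a red fox in the snow")
c2 = encode_prompt("A red fox in the snow")
print(len(c1), similarity(c1, c2))
```

Because this encoder treats a prompt as a bag of words, "red fox" and "fox red" get identical vectors. Capturing syntax and context, as the paragraph above describes, is exactly what a transformer-based encoder adds over a sketch like this.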

Diffusion models use the textual descriptions produced by GPTs to create images faithful to the user’s input. Generation starts from random noise, which the model refines step by step, removing a little noise at each step until a coherent image emerges. Because diffusion models learn to approximate the high-dimensional probability distribution of real images, the images they produce can contain complex and realistic details.
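For readers who want the math, the standard denoising diffusion (DDPM) formulation is summarized below. It is the generic textbook version rather than a statement of DALL-E’s exact parameterization; here $x_0$ is a clean image, $\beta_t$ is a small per-step noise amount, $\epsilon_\theta$ is the network’s noise prediction, and $c$ stands for the text conditioning.

```latex
% Noise schedule: \beta_1, \dots, \beta_T are small positive constants
\alpha_t = 1 - \beta_t, \qquad \bar{\alpha}_t = \prod_{s=1}^{t} \alpha_s

% Forward (noising) process, in closed form for any step t
x_t = \sqrt{\bar{\alpha}_t}\, x_0 + \sqrt{1 - \bar{\alpha}_t}\, \epsilon, \qquad \epsilon \sim \mathcal{N}(0, I)

% Training objective: predict the added noise, given the text conditioning c
\mathcal{L} = \mathbb{E}_{x_0, \epsilon, t}\left[ \left\| \epsilon - \epsilon_\theta(x_t, t, c) \right\|^2 \right]

% Reverse (denoising) step at generation time, starting from x_T \sim \mathcal{N}(0, I)
x_{t-1} = \frac{1}{\sqrt{\alpha_t}} \left( x_t - \frac{\beta_t}{\sqrt{1 - \bar{\alpha}_t}}\, \epsilon_\theta(x_t, t, c) \right) + \sigma_t z, \qquad z \sim \mathcal{N}(0, I)
```

A common choice is $\sigma_t^2 = \beta_t$, with $z = 0$ on the final step so that the last update adds no fresh noise.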

Noise to Narrative: The Artistry of DALL-E’s Diffusion Model

Diffusion models are the other key component behind DALL-E. A diffusion model is a deep neural network that learns to generate data similar to the data it was trained on. During training, noise is gradually added to a clean image until it becomes indistinguishable from random noise, and the network learns to predict and remove that noise. To generate a new image, the process runs in reverse: the model starts from pure noise and typically applies a single network repeatedly, each time predicting the noise present at the current step and removing part of it. When the network is also given a representation of the text prompt, each denoising step is steered toward the description, so the image becomes increasingly refined until the noise is replaced by details consistent with the prompt.
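The noising and denoising loop described above can be sketched in a few lines of NumPy. This is a minimal DDPM-style illustration under stated assumptions: the linear noise schedule (`betas` from 1e-4 to 0.02 over 1,000 steps) comes from the original DDPM setup rather than from DALL-E, the "image" is a toy 2x2 array, and `oracle_noise` is a hypothetical perfect noise predictor that already knows the target. A real system replaces it with a trained network that is also conditioned on the text prompt.

```python
import numpy as np

# Linear noise schedule from the original DDPM setup (an assumption; DALL-E's may differ).
T = 1000
betas = np.linspace(1e-4, 0.02, T)
alphas = 1.0 - betas
alpha_bar = np.cumprod(alphas)


def add_noise(x0, t, eps):
    """Forward process: jump straight to step t with x_t = sqrt(ab_t) x0 + sqrt(1 - ab_t) eps."""
    return np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps


def reverse_step(x_t, t, eps_pred, rng):
    """One DDPM denoising step from t to t-1, given a predicted noise eps_pred."""
    mean = (x_t - betas[t] / np.sqrt(1.0 - alpha_bar[t]) * eps_pred) / np.sqrt(alphas[t])
    if t == 0:
        return mean  # no fresh noise on the final step
    return mean + np.sqrt(betas[t]) * rng.standard_normal(x_t.shape)


def sample(predict_noise, shape, rng):
    """Start from pure Gaussian noise and denoise for T steps with one shared predictor."""
    x = rng.standard_normal(shape)
    for t in reversed(range(T)):
        x = reverse_step(x, t, predict_noise(x, t), rng)
    return x


target = np.array([[0.0, 1.0], [1.0, 0.0]])  # toy 2x2 "image"


def oracle_noise(x_t, t):
    # Hypothetical perfect predictor that knows `target`; a trained network approximates this.
    return (x_t - np.sqrt(alpha_bar[t]) * target) / np.sqrt(1.0 - alpha_bar[t])


rng = np.random.default_rng(0)
noisy = add_noise(target, T - 1, rng.standard_normal(target.shape))
print("signal left at the last step:", np.sqrt(alpha_bar[T - 1]))
result = sample(oracle_noise, target.shape, rng)
print(np.round(result, 3))
```

Because the oracle’s predictions are exact, the final step lands on the target; with a learned network, the result would instead be a new sample resembling the training data. Note that the same `predict_noise` function is called at every step, with the step index `t` telling it how much noise to expect.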

The synergy between GPTs and diffusion models is what makes DALL-E’s image generation so capable. GPTs supply the textual descriptions that guide the diffusion model as it generates images. Working together this way, the two models let DALL-E capture intricate details and produce high-quality images that closely reflect the user’s prompt. This partnership between language and vision opens new possibilities for creative expression and could transform the fields of art and design.

Precision Partners: GPTs and Diffusion Models Shaping DALL-E’s Images

DALL-E’s image generation rests on the interplay between two distinct types of AI: GPTs and diffusion models. The two components complement each other closely, forming a pipeline that converts textual descriptions into visual creations with intricate details.

GPTs provide detailed instructions to the diffusion model, which uses a neural network to predict and remove noise from the image. Over many steps the image is gradually denoised, and the noise is replaced by details consistent with the original textual description. This iterative refinement helps the model capture even complex visual elements in the final high-quality image.
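One widely used way for text to steer each denoising step is classifier-free guidance, which OpenAI’s GLIDE work, for example, relied on. The network is trained with the prompt randomly dropped, so at generation time it can make two predictions, one with the prompt and one without, and the difference between them is amplified by a guidance scale. The sketch below assumes a hypothetical `toy_denoiser` and a made-up prompt embedding in place of a trained model.

```python
import numpy as np


def toy_denoiser(x_t, t, text_embedding=None):
    """Hypothetical stand-in for a trained noise predictor eps_theta(x_t, t, c).

    Without text it just scales x_t; with text it is also nudged by the embedding.
    """
    eps = 0.5 * x_t
    if text_embedding is not None:
        eps = eps + 0.1 * text_embedding
    return eps


def guided_noise(denoiser, x_t, t, text_embedding, guidance_scale):
    """Classifier-free guidance: extrapolate from the unconditional toward the conditional prediction."""
    eps_uncond = denoiser(x_t, t, None)            # prediction with the prompt dropped
    eps_cond = denoiser(x_t, t, text_embedding)    # prediction conditioned on the prompt
    return eps_uncond + guidance_scale * (eps_cond - eps_uncond)


x_t = np.array([1.0, -2.0, 0.5])
c = np.array([1.0, 0.0, -1.0])  # pretend prompt embedding
for w in (0.0, 1.0, 3.0):
    print(w, guided_noise(toy_denoiser, x_t, 10, c, w))
```

A scale of 0 ignores the prompt, 1 uses the plain conditional prediction, and values above 1 push each step harder toward the description, which tends to trade some diversity for closer adherence to the text.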

The collaboration between GPTs and diffusion models is a mutually beneficial partnership that places DALL-E at the forefront of image generation technology. GPTs provide the linguistic understanding and contextual insight that diffusion models need to translate textual descriptions accurately into visual representations. In turn, diffusion models supply the image-generating power, transforming the GPTs’ instructions into visual creations that accurately reflect the original text.

Ideally, this symbiotic relationship goes beyond a one-way handoff and becomes a process of mutual enhancement. As the two components are developed together, the language side can be tuned toward descriptions that the image model renders well, while the diffusion model benefits from the richer context and detail the GPTs offer, enabling it to produce images with greater precision and fidelity.

Unleashing Potential: The Future of DALL-E

The collaboration between GPTs and diffusion models in DALL-E leaves ample room for further integration and advancement. Enhancing the capabilities of each component and deepening their collaboration could unlock even more transformative applications for this groundbreaking AI tool.

One promising area of development is incorporating more sophisticated natural language processing (NLP) techniques into GPTs. This could let GPTs extract deeper meaning and nuance from textual descriptions, capturing subtle emotional cues and implicit references. With a richer understanding of the user’s intent, GPTs could generate even more contextually appropriate descriptions, giving diffusion models more precise guidance.

At the same time, refined diffusion models could generate even more realistic and detailed images. Training them on larger and more diverse datasets would let them capture a broader range of visual patterns and styles. Incorporating techniques from generative adversarial networks (GANs) could further improve the fidelity of generated images, making them virtually indistinguishable from real photographs.

These advances in GPTs and diffusion models would propel DALL-E to new heights, making it an indispensable tool across many fields. In art and design, DALL-E could let artists and designers bring their creative visions to life with unprecedented ease and precision. Imagine conceptualizing an artwork or design simply by describing it in text and having DALL-E produce a visual representation that captures every detail and nuance of your idea.

Similarly, DALL-E could change how we convey information and ideas visually. Imagine generating compelling infographics, presentations, and educational materials simply by describing the desired visuals in text. DALL-E also lets people with limited artistic or design skills create impactful visuals for personal or professional projects.

Ending Thoughts

DALL-E’s fusion of GPTs and diffusion models has revolutionized image generation. GPTs decode complex textual descriptions, providing the foundation for DALL-E’s stunning visual output. The collaboration between GPTs and diffusion models creates a nuanced dance of mutual enhancement, simultaneously refining language understanding and imagery. Further integration and advancements in both components could enhance DALL-E’s transformative applications. Improved natural language processing for GPTs could capture nuanced cues, while refined diffusion models might generate even more realistic images. This potential evolution positions DALL-E as an indispensable tool, enabling easy and precise realization of creative visions and transforming visual communication across various fields. The journey from the current synergy to future advancements represents a significant stride in AI’s capacity to redefine visual creativity and communication.